
Light-field imaging, a technique that captures volumetric information within a single frame, has emerged as a preferred tool for observing three-dimensional dynamic phenomena1,2. Its applications range from microscopic imaging of biological tissue structures3,4 to macroscopic imaging of flow dynamics5,6. However, the inherent trade-off between lateral resolution and axial information coverage results in low resolution, which limits the widespread application of the technique7. While optimising and innovating light-field optical systems can enhance their resolution8−10, these steps inevitably increase the cost and complexity of the systems, further hindering adoption of the technique. In recent years, numerous methods have been proposed to improve resolution through image reconstruction software, most of which rely on deep learning (DL) approaches based on convolutional neural networks (CNNs)3,11,12. These methods have successfully enhanced the resolution of light-field microscopy, enabling high-speed three-dimensional imaging of rapid biological processes such as neuronal activity and cardiac blood flow. However, DL methods incur additional computational costs and can produce large reconstruction errors13.
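To make the resolution trade-off concrete, the sketch below assumes a conventional (unfocused) microlens-array light-field design in which each microlens covers a square block of sensor pixels. Every pixel behind a lenslet records a different viewing angle, so the number of lateral samples per axis is the sensor width divided by the pixels per lenslet. The sensor width and lenslet sizes are hypothetical illustration values, not parameters of the systems discussed here.

```python
def light_field_sampling(sensor_px: int, px_per_lenslet: int) -> tuple[int, int]:
    """Return (lateral samples per axis, angular samples per axis).

    Assumes a conventional microlens-array light-field design in which
    each lenslet covers a px_per_lenslet x px_per_lenslet pixel block.
    """
    if px_per_lenslet <= 0 or sensor_px < px_per_lenslet:
        raise ValueError("lenslet must cover at least one pixel and fit on the sensor")
    lateral = sensor_px // px_per_lenslet  # one lateral sample per lenslet
    angular = px_per_lenslet               # one angular sample per pixel under a lenslet
    return lateral, angular


# Hypothetical 2048-pixel-wide sensor: more angular views (better axial
# coverage) leave fewer lateral samples.
for n in (1, 5, 15):
    lat, ang = light_field_sampling(2048, n)
    print(f"{n:>2} px/lenslet -> {lat} lateral x {ang} angular samples per axis")
```

Under these assumptions, raising the pixels per lenslet from 1 to 15 cuts lateral sampling from 2048 to 136 samples per axis, which is the kind of resolution loss that reconstruction software aims to recover.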

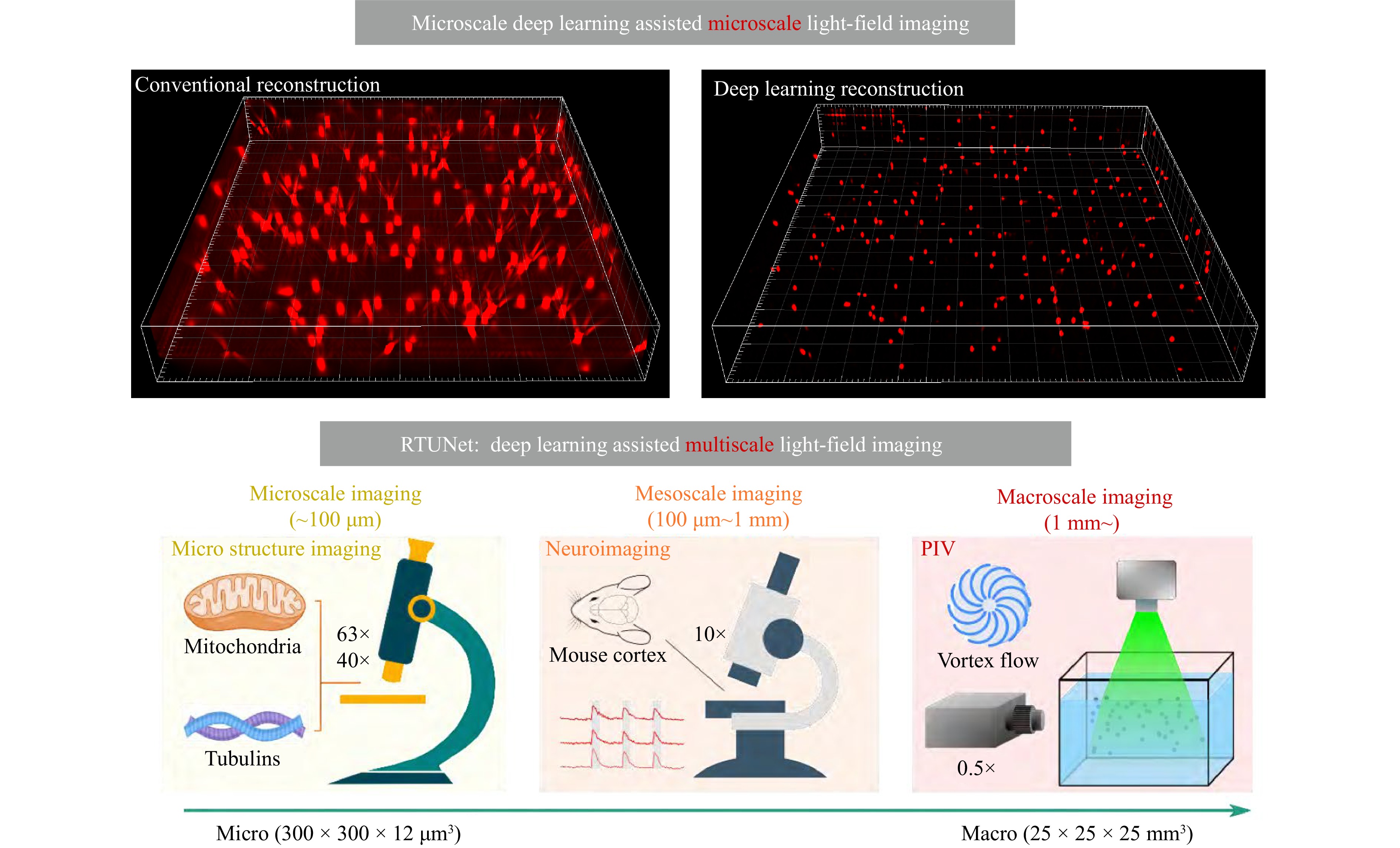

Currently, the feasibility of DL-assisted light-field imaging has only been demonstrated at the microscale, and its suitability at the meso- and macroscales remains unclear. This gap matters because a notable advantage of light-field imaging is its versatility across scales, and the absence of software for high-resolution image reconstruction at large scales hinders its application to a broad range of research fields (Fig. 1). Moreover, these DL-based methods rely on traditional linear loss functions for network training and thus require extensive manual fine-tuning to design the loss function and collect training data. In contrast, the adaptive nonlinear loss functions of generative adversarial networks (GANs) are tuned according to the data during training, leading to more visually satisfactory results14. However, GANs have rarely been applied to high-resolution light-field image reconstruction. Therefore, developing a light-field image reconstruction method with high resolution, high efficiency, and, most importantly, general applicability at any scale is crucial for extending light-field imaging to a wider range of domains.

Fig. 1 Concept of DL-assisted light-field imaging, and the extension of this work to multiscale light-field imaging.

In a newly published paper in Light: Science & Applications, Depeng Wang’s group from Nanjing University of Aeronautics and Astronautics, China, proposed an approach called the real-time and universal network (RTU-Net) to realise these goals for light-field imaging15. The RTU-Net takes raw light-field images as inputs and reconstructs high-resolution volumetric images as outputs. The GAN-based RTU-Net consists of one generator and one discriminator, which enable three optimisation strategies: (i) training the discriminator to accurately distinguish between generated (fake) and real data, (ii) training the generator to produce outputs that closely resemble the ground truth, and (iii) improving the generator by using the discriminator as the basis for optimisation. Using this approach, Wang’s group demonstrated high-resolution light-field image reconstruction with the RTU-Net at the microscale (~300 µm), mesoscale (~1 mm), and macroscale (~3 cm).
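The three optimisation strategies can be illustrated with the loss terms of a generic conditional GAN. This sketch is not the RTU-Net implementation: it assumes a discriminator that outputs the probability that its input is real, uses binary cross-entropy for the adversarial terms and mean absolute error for the similarity term, and treats the weighting factor `lam` as a hypothetical choice.

```python
import math

EPS = 1e-12  # keeps log() away from 0


def bce(prob: float, target: float) -> float:
    """Binary cross-entropy for a single predicted probability."""
    p = min(max(prob, EPS), 1.0 - EPS)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """(i) Teach the discriminator to score real data as 1 and generated data as 0."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def content_loss(generated: list[float], truth: list[float]) -> float:
    """(ii) Pull the generator output towards the ground truth (mean absolute error)."""
    if len(generated) != len(truth) or not truth:
        raise ValueError("volumes must be non-empty and have the same number of voxels")
    return sum(abs(g - t) for g, t in zip(generated, truth)) / len(truth)


def generator_loss(d_fake: float, generated: list[float], truth: list[float],
                   lam: float = 100.0) -> float:
    """(iii) Reward the generator for fooling the discriminator, plus the content term.

    lam is a hypothetical weight balancing the adversarial and content terms.
    """
    adversarial = bce(d_fake, 1.0)  # generator wants D(generated) -> 1
    return adversarial + lam * content_loss(generated, truth)


# Toy values standing in for network outputs on flattened volumes.
truth = [0.0, 0.5, 1.0]
generated = [0.1, 0.5, 0.8]
print(discriminator_loss(d_real=0.8, d_fake=0.3))
print(generator_loss(d_fake=0.3, generated=generated, truth=truth))
```

In a full training loop these scalars would be computed on network outputs and minimised alternately: the discriminator step uses `discriminator_loss` and the generator step uses `generator_loss`. The L1 content term with a large weight follows common image-to-image GAN practice (for example pix2pix) rather than anything stated for the RTU-Net.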

Specifically, at the microscale, the RTU-Net outperformed previous methods in spatial resolution and in suppressing reconstruction artefacts when imaging tubulin and mitochondria. At the mesoscale, the RTU-Net revealed neuronal activity traces from a reconstructed mouse cortex volume, with results closely resembling the ground truth. At the macroscale, the authors performed light-field particle image velocimetry measurements, which test the quality of flow-dynamics reconstruction, and showed that the RTU-Net improved particle localisation resolution three- to four-fold compared with the conventional refocusing approach. The RTU-Net reportedly generalises better than previous end-to-end networks, increases reconstruction speed by several orders of magnitude, achieves the best performance among all compared methods on evaluation metrics such as peak signal-to-noise ratio, learned perceptual image patch similarity, and mean squared error, and offers excellent robustness and reconstruction accuracy. These results position the RTU-Net as a versatile tool for bridging microscale-to-macroscale light-field image reconstruction.
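Two of the evaluation metrics mentioned, mean squared error (MSE) and peak signal-to-noise ratio (PSNR), have simple closed forms: MSE is the mean of the squared voxel differences, and PSNR = 10·log10(peak² / MSE) expresses that error on a decibel scale relative to the peak intensity. The sketch below computes both for flattened volumes, assuming intensities normalised to a hypothetical peak value of 1.0; learned perceptual image patch similarity is omitted because it requires a pretrained neural network.

```python
import math


def mse(estimate: list[float], reference: list[float]) -> float:
    """Mean squared error between two equally sized, flattened volumes."""
    if len(estimate) != len(reference) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    return sum((e - r) ** 2 for e, r in zip(estimate, reference)) / len(reference)


def psnr(estimate: list[float], reference: list[float], peak: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB; higher means closer to the reference."""
    err = mse(estimate, reference)
    if err == 0:
        return math.inf  # identical volumes
    return 10.0 * math.log10(peak ** 2 / err)


reference = [0.0, 0.5, 1.0, 0.5]
estimate = [0.1, 0.4, 0.9, 0.6]   # every voxel off by 0.1
print(mse(estimate, reference))   # about 0.01
print(psnr(estimate, reference))  # about 20 dB
```

Because PSNR is a logarithm of the inverse MSE, each tenfold reduction in MSE raises PSNR by 10 dB, so the two metrics rank reconstructions consistently.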

The proposed GAN-based RTU-Net offers high resolution, robustness, high efficiency, and multiscale applicability for light-field image reconstruction. Moreover, because the RTU-Net can be trained on small datasets, it may remain resistant to overfitting even as the size of the training set is reduced. Despite the urgent need for cross-scale imaging, the current study by Wang et al. does not distinguish between the characteristics of imaging data at different scales. Future studies should address this aspect and develop adaptive techniques that can intelligently adjust DL networks to accommodate different scales. Although the RTU-Net has so far been applied only to light-field imaging, appropriate adjustments to the network structure can expand its applicability to diverse domains. A deconvolution or background-removal module could be added to the RTU-Net to process noisy data16. Moreover, improved preprocessing and postprocessing strategies could further enhance its resolution17, and more powerful GPUs would increase the network’s training speed. In addition, collecting more high-quality training data and incorporating network architectures with high data scalability, such as Swin Transformers18, could significantly boost the performance of DL-assisted light-field imaging.

The RTU-Net, with its high resolution, robustness, high efficiency, and general applicability from the microscale to the macroscale, is expected to become a powerful tool for high-resolution multiscale volumetric imaging of dynamic processes. It is also expected to inspire researchers to explore new imaging and data processing techniques that can be applied at the microscale as well as across scales.

Bridging microscale and macroscale light-field image reconstruction using ‘real-time and universal network’

- Light: Advanced Manufacturing, Article number: (2025)

- Received: 07 April 2025

- Revised: 19 June 2025

- Accepted: 25 July 2025

- Published online: 20 August 2025

doi: https://doi.org/10.37188/lam.2025.060

Abstract: A real-time and universal network (RTU-Net) has been proposed for multiscale, real-time, high-resolution reconstruction of light-field images. The proposed RTU-Net can be applied to various application domains to gain insights into volumetric imaging from microscale to macroscale.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.
